Last updated: January 2026

A finance employee in Hong Kong joined what appeared to be a routine video call with their CFO and several colleagues to finalize details of a confidential acquisition. After a seemingly legitimate discussion, the employee authorized $25.6 million in wire transfers. Every person on that call — except the victim — was a deepfake.

This attack on engineering firm Arup wasn't an anomaly. It was a warning shot. And by 2026, incidents like this won't be shocking — they'll be expected, unless organizations fundamentally rethink how trust is established in a world where seeing is no longer believing.

TL;DR

Deepfake-enabled fraud losses exceeded $200 million globally in Q1 2025 alone, a figure that likely represents only a fraction of total impact due to underreporting.

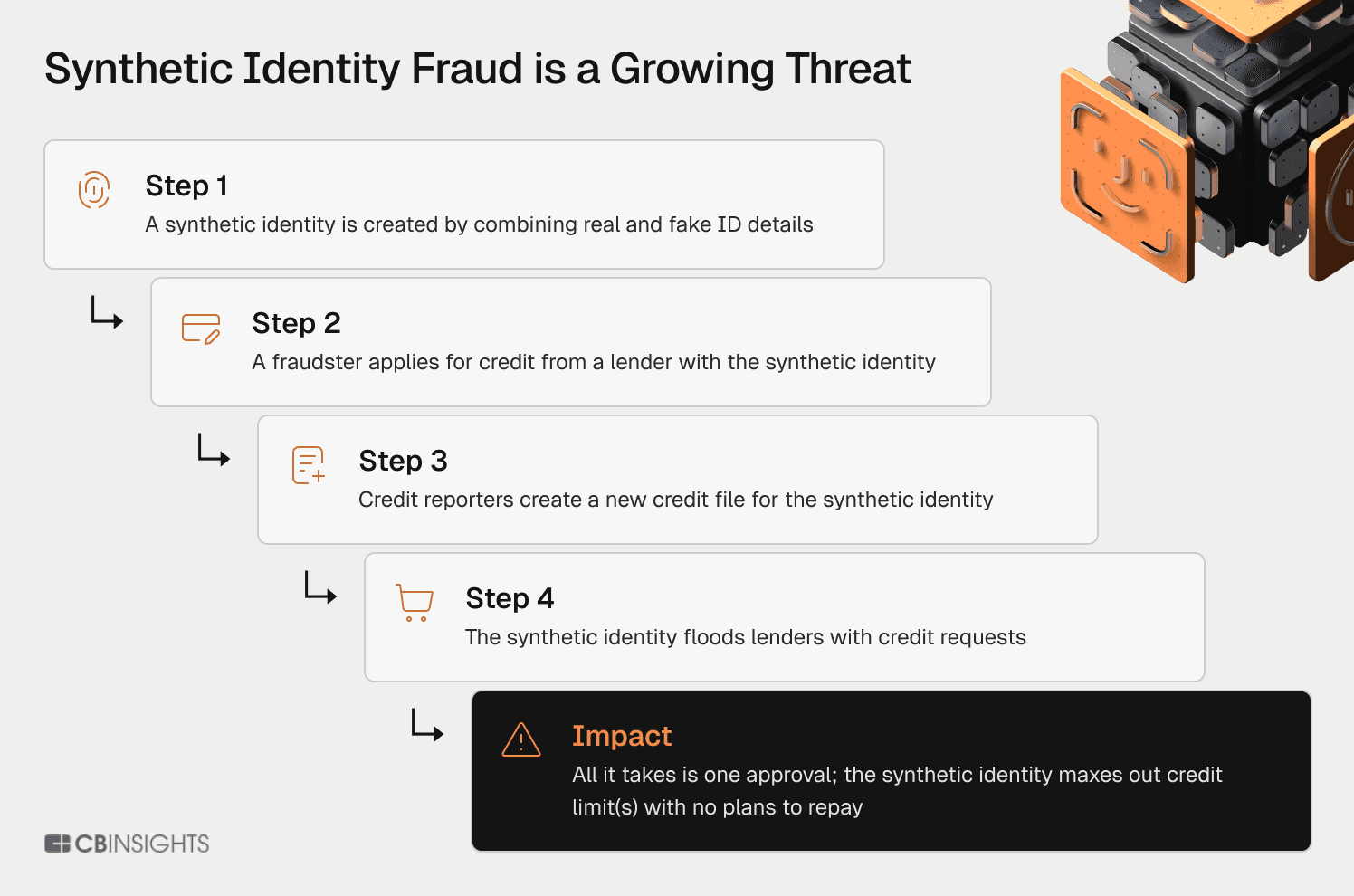

Synthetic identity fraud is now a $30–35 billion annual drain, with the majority of losses concealed within "credit losses" rather than flagged as fraud, allowing fabricated identities to persist undetected until default.

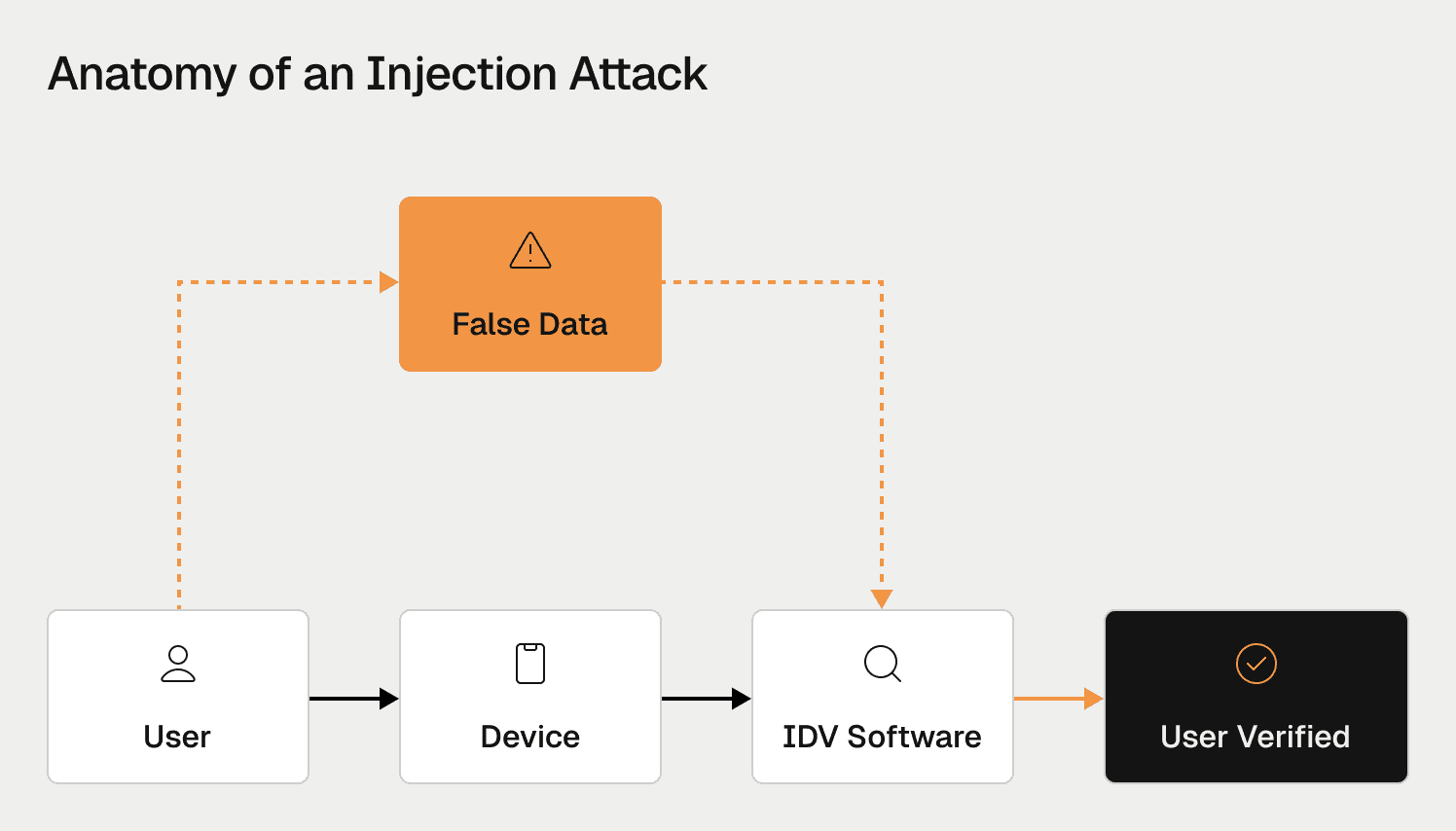

Attackers use injection attacks to bypass video verification by feeding deepfakes directly into data streams, defeating traditional liveness detection entirely.

AI-enabled fraud is projected to reach $40 billion annually in the U.S. by 2027 (~30% CAGR), with Experian calling 2026 a tipping point as 72% of business leaders now rank AI-enabled fraud a top operational challenge.

Effective defense requires unified intelligence that correlates identity, device, behavioral, and transactional signals in real time.

How big is the deepfake fraud problem in 2026?

The published numbers don't capture the real-world scale of the problem. Deepfake-enabled fraud rarely gets labeled as such in financial reporting. Instead, losses get absorbed into broader categories like authorized push payment fraud, account takeover fraud, or credit defaults. What we see in official figures represents only verified, attributed incidents, not actual impact.

Yet even with systematic undercounting, the acceleration is unmistakable. The World Economic Forum reports more than $200 million in confirmed deepfake-related fraud losses in Q1 2025 alone, describing the trend as a fundamental shift toward direct, scalable attacks on business operations.

The Deloitte Center for Financial Services projects AI-enabled fraud could reach $40 billion annually in the United States by 2027 — a roughly 30% compound annual growth rate. That projection spans deepfakes, synthetic identities, automated social engineering, and AI-driven account takeover, illustrating how deepfakes now function as one component in a broader AI-powered fraud stack.
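As a rough sanity check on that growth rate, the sketch below backs out the starting point implied by a $40 billion target at 30% annual growth. The four-year horizon (a 2023 baseline) is an assumption made for illustration, not a figure stated above, and the function names are hypothetical.

```python
def implied_baseline(target: float, cagr: float, years: int) -> float:
    """Starting value that compounds to `target` at `cagr` over `years`."""
    return target / (1 + cagr) ** years


def project(baseline: float, cagr: float, years: int) -> float:
    """Value after compounding `baseline` at `cagr` for `years`."""
    return baseline * (1 + cagr) ** years


TARGET_BN = 40.0  # projected U.S. losses by 2027, in $ billions (from the text)
CAGR = 0.30       # "roughly 30%" (from the text)
YEARS = 4         # ASSUMPTION: growth is measured from a 2023 baseline

growth_multiple = (1 + CAGR) ** YEARS
baseline_bn = implied_baseline(TARGET_BN, CAGR, YEARS)

print(f"Growth multiple over {YEARS} years: {growth_multiple:.2f}x")
print(f"Implied baseline: ${baseline_bn:.1f}B")
```

Under those assumptions, losses nearly triple over the period, which is what a 30% compound rate means in practice.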

Detection data shows similar velocity. Signicat reports deepfake fraud attempts have increased by more than 2,000% over the past three years, driven by fraud-as-a-service marketplaces and rapidly improving generative models. Experian's 2026 Future of Fraud Forecast calls this year a clear "tipping point," with 72% of business leaders identifying AI-enabled fraud as a top operational challenge.

The technical barriers continue to collapse. As we detailed in an earlier article, voice cloning now requires just 20-30 seconds of audio, while convincing video deepfakes can be generated in 45 minutes — making high-impact fraud cheaper, faster, and far more scalable than traditional identity attacks.

What makes deepfake attacks so effective against banks?

The threat comes from delivery systems as much as the fakes themselves. Modern attackers bypass identity verification using injection attacks, where synthetic video is fed directly into data streams via virtual camera software or mobile emulators. The bank's application believes it's receiving a live camera feed when it's actually processing a digital fabrication. Because the liveness check runs on the injected stream rather than a real camera feed, these attacks can sidestep it. They work against passive checks and against many active liveness solutions (such as head turns or blinking) when those challenges lack true randomness.
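To make the point about randomness concrete, here is a minimal sketch of an unpredictable, single-use active liveness challenge. The action names, the six-digit phrase, and both function names are hypothetical. Randomized challenges only raise the cost of pre-rendered fakes; they do not stop a real-time injected stream on their own.

```python
import secrets

# Hypothetical action vocabulary; a real product would define its own.
ACTIONS = ["turn_left", "turn_right", "look_up", "blink", "smile"]


def new_challenge(length: int = 3) -> dict:
    """Build a challenge an attacker cannot pre-record an answer to."""
    rng = secrets.SystemRandom()  # OS-backed CSPRNG, not the seeded `random` module
    return {
        "nonce": secrets.token_hex(16),                # single-use identifier
        "steps": rng.sample(ACTIONS, k=length),        # random actions, random order
        "digits": f"{secrets.randbelow(10**6):06d}",   # digits to read aloud
    }


def verify_response(challenge: dict, observed_steps: list[str],
                    spoken_digits: str, used_nonces: set[str]) -> bool:
    """Accept a response once, and only if it matches the issued challenge."""
    if challenge["nonce"] in used_nonces:
        return False  # replayed challenge
    used_nonces.add(challenge["nonce"])
    digits_ok = secrets.compare_digest(spoken_digits, challenge["digits"])
    return observed_steps == challenge["steps"] and digits_ok


challenge = new_challenge()
seen: set[str] = set()
first_try = verify_response(challenge, challenge["steps"], challenge["digits"], seen)
replay = verify_response(challenge, challenge["steps"], challenge["digits"], seen)
```

The second call fails because the nonce has already been consumed, which is the property a fixed "blink, then turn your head" script lacks.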

At the same time, deepfake-enabled fraud is scaling through the rise of fraud-as-a-service. Organized groups now sell access to turnkey deepfaking tools and identity fraud infrastructure, dramatically lowering the barrier to entry for attackers. This creates a concentration effect: shared tools, reused assets, and overlapping operators make these networks more visible to graph-based and visual network analysis, enabling defenders to uncover relationships and dismantle entire fraud rings rather than stopping individual attacks.
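The concentration effect lends itself to simple graph linking. The sketch below groups accounts that share any attribute (a device ID, IP address, or phone number) using union-find; it is one illustrative way to surface a ring, not a description of any vendor's method. The account and attribute values are made up.

```python
from collections import defaultdict


def find_rings(accounts: dict[str, set[str]], min_size: int = 2) -> list[set[str]]:
    """Return groups of accounts connected through shared attributes."""
    parent = {account: account for account in accounts}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    first_owner: dict[str, str] = {}
    for account, attributes in accounts.items():
        for attribute in attributes:
            if attribute in first_owner:
                # Same attribute seen before: merge the two accounts' groups.
                parent[find(account)] = find(first_owner[attribute])
            else:
                first_owner[attribute] = account

    groups: dict[str, set[str]] = defaultdict(set)
    for account in accounts:
        groups[find(account)].add(account)
    return [group for group in groups.values() if len(group) >= min_size]


# Hypothetical data: four accounts chained together by reused assets.
accounts = {
    "acct_1": {"device:A", "ip:1"},
    "acct_2": {"device:A"},
    "acct_3": {"ip:9", "phone:5"},
    "acct_4": {"phone:5", "ip:1"},
    "acct_5": {"device:Z"},
}
rings = find_rings(accounts)
```

Here `acct_1` through `acct_4` fall into one group even though no single attribute is shared by all four, which is why ring-level analysis catches what per-account checks miss.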

According to a recent analysis by an identity verification firm, fraud attempts grew 21% year over year, with one in twenty verification attempts now flagged as fraudulent. Attackers increasingly combine deepfakes with phishing, social engineering, and account takeovers to overwhelm traditional controls. This is why solutions like Oscilar's Cognitive Identity Intelligence move beyond static checks, correlating behavior, infrastructure, and network-level signals that synthetic identities — even highly realistic ones — cannot consistently replicate.

Why is synthetic identity fraud the "invisible enemy"?

The Federal Reserve Bank of Boston identifies synthetic identity fraud as the fastest-growing financial crime in America, with losses crossing $35 billion in 2023. Unlike traditional identity theft, synthetic fraud creates "Frankenstein" identities by combining a legitimate Social Security number (often from a child, elderly person, or deceased individual) with fabricated names and dates of birth.

These fabricated personas are incubated for 12-24 months. Fraudsters apply for credit, get rejected (which creates a credit file), then gradually build pristine credit histories. By the time they "bust out" — maxing credit lines and vanishing — the synthetic identity may have a 750+ credit score. Our analysis of generative AI in fraud detection shows bust-out attacks using synthetic identities accounted for $2.9 billion in auto loans and credit cards alone.

The Federal Reserve's Synthetic Identity Fraud Mitigation Toolkit reveals the accounting catastrophe: these losses typically get classified as "credit losses" (bad debt) rather than fraud losses. Banks believe they have an underwriting problem when they actually have a fraud problem, and this misclassification hides billions from risk teams.

But synthetic identities represent only one dimension of the identity crisis. Financial institutions also face persistent challenges from identity mules — real people using genuine documents who establish accounts before fraudsters take over. These mules can pass document verification and selfie checks, particularly when recruited from developing countries where the cost to compromise an individual is low.

Unlike synthetics, mules leave clean paper trails until the moment of takeover, making them nearly invisible to traditional onboarding controls.

How are criminals industrializing deepfake and synthetic identity attacks?

The Arup attack wasn't a lone hacker — it was the product of an industrialized supply chain. The dark web operates as a mature "Fraud-as-a-Service" marketplace. Deepfake generation services cost as little as $15 per video. "Initial Access Brokers" sell credentials to compromised networks, while "Panel Providers" offer subscription-based botnets that make attacks appear to originate from legitimate residential addresses.

Generative AI has turbocharged the threat. The Federal Reserve warns GenAI acts as "an accelerant" — automating identity creation, learning from failures, and optimizing which profiles succeed at specific institutions. As detailed in our analysis of AI agents in fraud and risk management, these agents can navigate banking interfaces, solve CAPTCHAs, and execute thousands of micro-transfers through mule networks in seconds.

The uncomfortable truth: You cannot staff your way out of industrialized, AI-driven fraud. Manual review teams will drown in the volume.

Why do bank defenses keep failing?

Financial institutions organize defenses in silos: KYC teams handle onboarding, fraud teams monitor transactions, AML teams watch for laundering, credit teams manage default risk. Attackers exploit the seams. A synthetic identity passes KYC (documents look legitimate). Credit approves a loan (the score is excellent). The fraud team flags a suspicious device — but since the user is "verified" and "credit approved," the alert gets suppressed.

This siloed approach creates a perverse paradox. Risk and fraud teams, who see fraudulent activity as 100% of their world even though it represents 1-2% of actual user behavior, often design friction-heavy product flows and features that frustrate legitimate customers. Meanwhile, genuinely dangerous attacks slip through because information doesn't flow between defense layers.

The challenge has two dimensions: organizational and temporal. Institutions need to distinguish between the speed of detection and the speed of action. Detecting something suspicious can happen instantly, but the severity of the fraud and the strategic value of observation (such as honeypotting to map fraud networks) determine whether action should be real-time or deliberately delayed. A $50 purchase can go through instantly. A $50,000 luxury watch transaction? Both buyer and seller expect due diligence.
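The separation between detecting and acting can be sketched as a small policy function. Every threshold below is a hypothetical placeholder chosen to reproduce the $50 and $50,000 examples; a real policy would be tuned to the institution's loss data.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up"                  # ask for extra verification
    HOLD_FOR_REVIEW = "hold_for_review"  # deliberate delay for due diligence
    OBSERVE = "observe"                  # let it run to map a suspected ring


def choose_action(amount: float, risk_score: float, model_precision: float,
                  ring_suspected: bool = False) -> Action:
    """Pick a response from severity (amount) and detection confidence."""
    expected_loss = amount * risk_score
    if ring_suspected and amount < 100:
        return Action.OBSERVE  # low stakes, high intelligence value
    if expected_loss < 5:
        return Action.ALLOW
    if amount >= 10_000 or (risk_score >= 0.8 and model_precision >= 0.9):
        return Action.HOLD_FOR_REVIEW
    return Action.STEP_UP


small_purchase = choose_action(amount=50, risk_score=0.05, model_precision=0.9)
luxury_watch = choose_action(amount=50_000, risk_score=0.05, model_precision=0.9)
honeypot = choose_action(amount=40, risk_score=0.9, model_precision=0.9,
                         ring_suspected=True)
```

The same risk score produces different actions for the $50 purchase and the $50,000 watch, because the decision weighs consequence as well as suspicion.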

Gartner predicts that by 2026, 30% of enterprises will no longer trust identity verification solutions used in isolation. The "one-and-done" KYC model is fundamentally broken. This is why Oscilar's agentic AI approach focuses on continuous risk intelligence rather than checkpoint-based security.

Meanwhile, only 22% of financial institutions have implemented AI-based fraud prevention tools, according to identity platform Signicat — a dangerous gap when attackers operate at API speed while defenders work in batch processes. Nearly 60% of companies reported increased fraud losses from 2024 to 2025.

What does effective defense look like in 2026?

By 2026, effective defense depends less on isolated rules and more on unified intelligence. That means bringing identity, device, behavioral, and transaction signals together into a single, real-time decisioning framework, so risk can be assessed holistically rather than piecemeal.
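As a minimal sketch of what "holistic rather than piecemeal" can mean in code, the function below combines the four signal families into one score while refusing to let a single strong signal be averaged away. The equal weights and the 0.8 floor factor are assumptions for illustration, not a recommended calibration.

```python
from dataclasses import dataclass, astuple


@dataclass(frozen=True)
class Signals:
    """Per-domain risk scores in [0, 1]; higher means riskier."""
    identity: float
    device: float
    behavior: float
    transaction: float


# ASSUMPTION: equal weights and a 0.8 floor factor, purely illustrative.
WEIGHTS = Signals(identity=0.25, device=0.25, behavior=0.25, transaction=0.25)
FLOOR_FACTOR = 0.8


def unified_risk(signals: Signals) -> float:
    """Weighted blend, floored so one alarming signal cannot be suppressed."""
    values = astuple(signals)
    weighted = sum(w * v for w, v in zip(astuple(WEIGHTS), values))
    return max(weighted, FLOOR_FACTOR * max(values))


# A "verified", creditworthy applicant on a highly suspicious device.
applicant = Signals(identity=0.05, device=0.90, behavior=0.20, transaction=0.10)
score = unified_risk(applicant)
print(f"Unified risk: {score:.2f}")
```

A plain average of these inputs would land near 0.31 and likely pass; the floor keeps the device anomaly visible in the final decision instead of letting clean identity and credit results drown it out.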

Defending against deepfakes and modern fraud requires depth across four interlocking layers:

Layer 1: Mass Registration Prevention

At the outermost layer, risk teams work with infosec to detect credential stuffing, bot-driven account creation, and credentials for sale after major data breaches. This stops attacks before they reach legitimate verification flows.

Layer 2: Registration and Application Validation

Beyond validating PII, effective controls at this layer include employment and income verification for lending products. For personal loans, auto loans, and mortgages, these checks create friction that's proportional to risk — what we call prudent friction, calibrated to the severity of the event and the precision of detection models.

Layer 3: Post-Registration Behavioral Monitoring

After an account is established, the focus shifts to detecting suspicious behaviors at transaction time. Credit card bust-outs show up in first-purchase patterns. Rideshare fraud manifests in location anomalies, distance traveled, or driving behavior. Marketplace fraud appears as explosive seller growth or delay tactics designed to make buyers think items are in transit.

Layer 4: Account Takeover Defense

Social engineering remains the primary vector for account takeover. Continuous monitoring of session behavior, device switching, and communication patterns can detect when a legitimate account holder has been displaced by a fraudster.
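For the account takeover layer, one simple session-level check is to flag sensitive actions that follow shortly after a switch to an unrecognized device. The sketch below assumes a hypothetical event shape and action names; production systems would draw on far richer behavioral signals.

```python
from dataclasses import dataclass


@dataclass
class Event:
    device_id: str
    action: str


# Hypothetical set of actions that fraudsters typically perform after takeover.
SENSITIVE_ACTIONS = {"change_email", "change_phone", "add_payee", "reset_password"}


def takeover_flags(known_devices: set[str], events: list[Event],
                   window: int = 3) -> list[str]:
    """Flag a new device and any sensitive action within `window` events of it."""
    flags: list[str] = []
    new_device_at = None
    for i, event in enumerate(events):
        on_new_device = event.device_id not in known_devices
        if on_new_device and new_device_at is None:
            new_device_at = i
            flags.append(f"new_device:{event.device_id}")
        if (on_new_device and event.action in SENSITIVE_ACTIONS
                and i - new_device_at <= window):
            flags.append(f"sensitive_action_on_new_device:{event.action}")
    return flags


session = [
    Event("dev_home", "login"),
    Event("dev_x", "login"),
    Event("dev_x", "change_phone"),
    Event("dev_x", "add_payee"),
]
flags = takeover_flags({"dev_home"}, session)
```

A device switch alone is common and usually benign; it is the combination with contact-detail changes and new payees that suggests the account holder has been displaced.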

Platforms like Oscilar are designed to support this layered approach by enabling real-time decisions across fraud, credit, and compliance using shared signals and low-latency evaluation — so teams can respond quickly without fragmenting their defenses.

Preventing deepfakes across the attack surface

While deepfakes can appear convincing in isolation, they leave behind a trail of breadcrumbs across the device, session, and application lifecycle.

Preventing deepfakes at the source starts with analyzing the device and interaction used to submit a biometric. Device fingerprinting, emulator and virtual camera detection, and behavioral and cognitive signals (such as micro-tremors in phone handling or hesitation patterns under pressure) can expose synthetic or coerced interactions before a biometric is even captured.

Preventing deepfakes at capture involves leveraging AI-powered liveness and biometric analysis to assess whether what's being presented is truly human. This goes beyond static or deterministic challenges, analyzing biometric artifacts and presentation dynamics for subtle inconsistencies that indicate manipulation or synthesis.

Preventing deepfakes at submission means treating the application as a holistic entity, not a single biometric event. Once data enters the system, Oscilar correlates signals across identity attributes, documents, devices, networks, and behavioral patterns — surfacing discrepancies such as a high-confidence face match paired with an anomalous device, mismatched document metadata, or suspicious transaction context.
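The source and submission stages above can be sketched together: first collect flags about the capture environment, then check whether a strong biometric result is contradicted by them. The name hints, thresholds, and function names are illustrative assumptions, and string matching on labels is easy for a determined attacker to evade, so treat this as one weak signal among many.

```python
# Illustrative substrings only; real detection relies on much deeper signals.
VIRTUAL_CAMERA_HINTS = ("obs virtual", "manycam", "droidcam", "virtual cam")
EMULATOR_HINTS = ("sdk_gphone", "emulator", "goldfish", "ranchu")


def source_flags(camera_label: str, device_model: str,
                 motion_variance: float) -> list[str]:
    """Flag capture environments that look synthetic."""
    flags = []
    if any(hint in camera_label.lower() for hint in VIRTUAL_CAMERA_HINTS):
        flags.append("virtual_camera")
    if any(hint in device_model.lower() for hint in EMULATOR_HINTS):
        flags.append("emulator")
    if motion_variance < 1e-4:  # hypothetical threshold: a handheld phone is never still
        flags.append("no_handset_motion")
    return flags


def submission_discrepancies(face_match: float, flags: list[str],
                             doc_metadata_consistent: bool) -> list[str]:
    """Look for signals that disagree with each other across the application."""
    issues = []
    if face_match >= 0.95 and flags:
        issues.append("strong_face_match_on_suspicious_device")
    if not doc_metadata_consistent:
        issues.append("document_metadata_mismatch")
    return issues


injected = source_flags("OBS Virtual Camera", "sdk_gphone64_x86_64", 0.0)
issues = submission_discrepancies(0.99, injected, doc_metadata_consistent=True)
genuine = source_flags("FaceTime HD Camera", "Pixel 8", 0.02)
```

The useful signal is the disagreement: a near-perfect face match is reassuring on a normal handset and suspicious on an emulator with a virtual camera.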

Fighting synthetic identities with authoritative data

For synthetic identities, the most powerful foundational control remains the Social Security Administration's eCBSV service, validating whether an SSN actually matches claimed identity data. Oscilar supports eCBSV through trusted identity providers such as Socure, and enriches those results with broader digital footprint analysis — email age, phone carrier history, device reuse, and network associations — allowing financial institutions to distinguish fabricated identities from legitimate thin-file applicants while uncovering repeatable fraud patterns at scale.
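A rough sketch of how an SSN verification result might be combined with digital footprint signals to separate fabricated identities from thin-file applicants follows. The verification outcome is modeled as a plain boolean rather than any real API response, and the field names and cutoffs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    ssn_match: bool                 # yes/no result from an SSN verification service
    email_age_days: int
    phone_tenure_days: int
    device_prior_applications: int  # other applications seen from this device


def triage(applicant: Applicant) -> str:
    """Route an application using authoritative data first, footprint second."""
    if not applicant.ssn_match:
        return "likely_synthetic"  # SSN does not belong to the claimed name and DOB
    # Hypothetical cutoffs: brand-new email AND phone, or a heavily reused device.
    fresh_footprint = (applicant.email_age_days < 30
                       and applicant.phone_tenure_days < 30)
    if fresh_footprint or applicant.device_prior_applications >= 3:
        return "review"
    return "proceed"


thin_file_but_real = triage(Applicant(True, 1500, 900, 0))
mismatched_ssn = triage(Applicant(False, 2000, 2000, 0))
reused_device = triage(Applicant(True, 1500, 900, 4))
```

A legitimate thin-file applicant has little credit history but usually a long-lived email and phone, which is why the first case proceeds while the others do not.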

Advanced ML models have evolved beyond snapshot-in-time predictions. Advances in compute now allow platforms to combine user data from across the entire journey, providing a holistic picture that proves far more effective than isolated decision points. This continuous intelligence model is what separates modern risk platforms from legacy checkpoint systems.

What should financial leaders do right now?

The operational playbook for 2026 demands five immediate actions:

1. Dismantle the silos

If your fraud team cannot see what your KYC team saw, you're blind to converged attacks. Break down organizational barriers between onboarding, transactions, credit, and AML.

2. Audit your "credit loss" bucket

Identify how much bad debt is actually synthetic fraud in disguise. Reclassifying these losses correctly will unlock budget and urgency for proper defenses.

3. Secure the camera pipe

Implement injection attack detection and treat every video feed as untrusted until cryptographically verified. The biometric itself may be perfect — but if the delivery mechanism is compromised, verification is meaningless.

4. Deploy AI-powered defense agents

Deploy autonomous investigation capabilities that scale with automated attacks. These agents can adjudicate whether activity is good or bad, request additional information, and identify similar cases in review queues, allowing human analysts to work an order of magnitude faster.

5. Shift from gatekeeper security to continuous monitoring

Trust must be re-earned every session, every transaction. The industry term emerging is KYX (Know Your Experience), replacing one-time KYC with persistent assurance across the customer lifecycle.
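Of the five actions, the audit of the "credit loss" bucket (action 2) is the easiest to prototype. The sketch below scans charged-off accounts for bust-out indicators and estimates what share of written-off balance may be misclassified fraud. The indicators, thresholds, and sample accounts are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ChargedOff:
    account_id: str
    balance: float
    payments_made: int
    final_30d_utilization: float  # share of the credit line drawn before default
    borrower_reachable: bool


def indicators(account: ChargedOff) -> list[str]:
    """Collect bust-out style warning signs for one written-off account."""
    found = []
    if account.payments_made == 0:
        found.append("never_paid")
    if account.final_30d_utilization >= 0.9:
        found.append("maxed_out_before_default")
    if not account.borrower_reachable:
        found.append("borrower_unreachable")
    return found


def audit(accounts: list[ChargedOff], min_indicators: int = 2) -> tuple[list[str], float]:
    """Return suspected account IDs and their share of charged-off balance."""
    suspected = [a for a in accounts if len(indicators(a)) >= min_indicators]
    total = sum(a.balance for a in accounts)
    share = sum(a.balance for a in suspected) / total if total else 0.0
    return [a.account_id for a in suspected], share


# Made-up portfolio for illustration.
portfolio = [
    ChargedOff("A1", 10_000, 0, 0.95, False),
    ChargedOff("A2", 5_000, 14, 0.20, True),
    ChargedOff("A3", 5_000, 0, 0.30, True),
]
suspected_ids, suspected_share = audit(portfolio)
```

In this toy portfolio one account carries half of the written-off balance and shows every bust-out indicator, which is the kind of finding that turns an apparent underwriting problem into a fraud budget conversation.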

Regulatory and standards pressure is mounting. NACHA's 2026 rules mandate "commercially reasonable" fraud detection for web debit entries. By August 2026, the EU AI Act will require clear deepfake labeling and transparency controls. Buyers should evaluate whether their vendors are meeting emerging technical standards head-on, such as CEN/TS 18099, which specifically addresses biometric data injection attacks.

As initiatives like Visa's Trusted Agent Protocol demonstrate, the future of trust hinges on cryptographic verification of every actor and every data stream across the transaction chain.
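What cryptographic verification of a data stream could look like for a camera feed is sketched below: each frame is tagged with an HMAC bound to a session nonce and sequence number, so injected, altered, or reordered frames fail verification. This is a simplified illustration that assumes a shared key held in trusted capture hardware; it is not how Visa's protocol or any specific standard works, and real deployments would more likely use asymmetric device attestation.

```python
import hashlib
import hmac
import secrets


def sign_frame(key: bytes, session_nonce: bytes, seq: int, frame: bytes) -> bytes:
    """Tag a frame so it is bound to this session and this position in the stream."""
    message = session_nonce + seq.to_bytes(8, "big") + hashlib.sha256(frame).digest()
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_frame(key: bytes, session_nonce: bytes, seq: int,
                 frame: bytes, tag: bytes) -> bool:
    """Constant-time check that the frame came from the key holder, unaltered."""
    expected = sign_frame(key, session_nonce, seq, frame)
    return hmac.compare_digest(expected, tag)


# ASSUMPTION: the key lives in the capture device's secure hardware.
key = secrets.token_bytes(32)
nonce = secrets.token_bytes(16)   # issued by the server per session
frame = b"\x00\x01raw-frame-bytes"

tag = sign_frame(key, nonce, 0, frame)
authentic = verify_frame(key, nonce, 0, frame, tag)
tampered = verify_frame(key, nonce, 0, frame + b"x", tag)
reordered = verify_frame(key, nonce, 1, frame, tag)
```

A virtual camera can produce pixels that look perfect, but without the key it cannot produce a valid tag, which shifts the question from "does this face look real?" to "did this frame come from a trusted sensor?".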

Building new infrastructure for trust

The $25.6 million Arup heist demonstrated a brutal new reality: when you cannot trust your eyes and ears, you must trust your data. The convergence of synthetic identities, deepfake injection, and agentic automation has rendered legacy security architectures obsolete.

The technology to fabricate reality exists today. The only defense is a system that sees the invisible patterns behind the fabrication: one that is unified, cognitive, and operating at machine speed. Platforms like Oscilar are building this new trust infrastructure, combining AI risk decisioning with cognitive identity intelligence to detect fraud in real time.

But the future also demands rethinking the fundamental relationship between identity, intent, and consequence. In an age where AI agents can conduct transactions on behalf of users, many legitimate customers will prioritize privacy and convenience over explicit identity disclosure. The challenge for risk managers isn't to demand perfect identification at every touchpoint but to calibrate risk response based on the severity of the consequence and the confidence of the detection model.

This goes beyond loss prevention. What's at stake is the foundation of the digital economy: trust itself.