Last updated: January 2026

Banking technology leaders face a familiar dilemma. McKinsey estimates that generative AI could add $200 billion to $340 billion in annual value to the global banking sector, equivalent to 9 to 15 percent of operating profits. The consultancy's vision of an AI Bank of the Future is compelling: real-time decision-making, autonomous agents, hyper-personalized service. Yet according to an August 2025 MIT study, 95% of enterprise AI pilots fail to deliver measurable financial impact. Most banks are stuck in what the industry has come to call "pilot purgatory," running dozens of isolated experiments that never scale.

Conventional wisdom says the only way out is "rip-and-replace" transformation: tear out the legacy core, rebuild from scratch, and accept 18-month procurement cycles and eight-figure budgets. But that narrative is both paralyzing and wrong.

There is a different path. Banks can capture 70 to 80 percent of AI's potential value by focusing on a small number of high-impact subdomains and deploying modern AI infrastructure alongside legacy systems, rather than attempting multiyear core replacements.

This article examines that path. It builds on McKinsey & Company's AI Bank of the Future framework, which positions AI as horizontal infrastructure spanning customer engagement, decision-making, core technology, and operating models. Taking that blueprint as a given, the focus here is execution: how banks can operationalize the vision under regulatory scrutiny, legacy-system constraints, and risk-management requirements.

Practitioners with deep experience building real-time data systems inside large financial institutions consistently observe the same gap: while banks invested heavily in moving data faster, they failed to modernize how risk decisions are made once that data arrives. The result is a proliferation of siloed tools, brittle pipelines, and slow manual processes, precisely when fraudsters are coordinating attacks across the entire customer journey.

TL;DR

Banks are stuck in "pilot purgatory." AI adoption stalls because risk decisioning remains siloed, procurement is slow, and governance models were built for deterministic (not agentic) systems.

Core replacement isn't required. Banks can capture 70 to 80% of AI's value by layering a unified, real-time decision platform on top of legacy systems.

The fastest payback is in the decision layer. Fraud, AML, credit, and compliance use cases, especially shadow-mode decisioning, analyst-assist agents, and AML overlays, deliver quick, low-risk wins.

Progress comes from "tractable slices." Small, contained deployments running alongside existing systems let banks prove value, satisfy model risk management, and scale safely.

Governance accelerates adoption. Explainability, human-in-the-loop controls, audit trails, and AI control towers enable faster deployment while maintaining compliance.

Part I: The Strategic Context

Why legacy systems can't keep up

The typical Tier 1 bank runs on infrastructure designed for a different era. Customer data sits in fragmented silos: separate databases for credit cards, mortgages, savings, and wealth management. Building a real-time, 360-degree view of any customer means connecting systems that were never designed to talk to each other. Decision logic is often hard-coded in mainframes or locked inside vendor "black boxes," turning even simple rule changes into multiweek engineering exercises. In practice, this often means a customer can fail identity verification during onboarding and still be approved for credit or instant payments minutes later, simply because those systems never share signals.

This architecture was optimized for stability in a world where fraud moved at human speed. Today, attackers probe onboarding flows, test payment rails, and exploit credit systems in coordinated sequences, often within minutes. The FedNow Service, which now has more than 1,400 participating financial institutions, and stablecoin rails demand instant settlement. Fraudsters use generative AI to launch attacks at machine speed. Customers expect the same responsiveness from their bank that they get from their streaming services. A system that updates overnight cannot defend against threats that evolve in minutes.

When risk systems operate independently, each sees only a fragment of the attack. Activity that looks innocuous in isolation becomes obvious only when signals are evaluated together, in real time.
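To make the point concrete, here is a minimal Python sketch, assuming three hypothetical risk signals scored between 0 and 1 and illustrative thresholds (none of these names or numbers come from a real system). Each siloed system sees only its own signal and lets the activity through; a single decision layer that sees all three flags it.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    """Hypothetical per-system risk scores in [0, 1]."""
    identity_risk: float     # e.g. onboarding / identity verification
    behavior_risk: float     # e.g. device and session anomalies
    transaction_risk: float  # e.g. payment velocity


SILO_THRESHOLD = 0.7  # illustrative: each silo blocks only above its own cutoff


def siloed_decisions(s: Signals) -> dict:
    """Each system evaluates only its own fragment of the activity."""
    return {
        "onboarding": s.identity_risk > SILO_THRESHOLD,
        "fraud": s.behavior_risk > SILO_THRESHOLD,
        "payments": s.transaction_risk > SILO_THRESHOLD,
    }


def unified_decision(s: Signals, threshold: float = 1.2) -> bool:
    """A single decision layer adds the signals, so moderate risk everywhere accumulates."""
    combined = s.identity_risk + s.behavior_risk + s.transaction_risk
    return combined > threshold


attack = Signals(identity_risk=0.5, behavior_risk=0.45, transaction_risk=0.5)
print(siloed_decisions(attack))  # → {'onboarding': False, 'fraud': False, 'payments': False}
print(unified_decision(attack))  # → True
```

The simple sum is a stand-in for whatever combined model a real decision platform would use; the point is only that one decision sees every signal at once.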

The McKinsey blueprint

McKinsey's AI Bank of the Future framework proposes treating AI not as a set of isolated use cases but as horizontal infrastructure spanning four layers of the organization: Engagement, Decisioning, Core Technology and Data, and Operating Model.

The framework is directionally correct. The challenge banks face is not understanding the destination but navigating the constraints required to reach it: regulatory approval, model risk management, legacy integration, and operational safety.

The Engagement layer covers customer-facing interfaces: multimodal interactions across voice, text, and images, supported by "digital twins" that simulate customer behavior and enable personalized, proactive service. The Decisioning layer is where data becomes action: AI agents and copilots reason in real time, orchestrate complex processes, and deliver what McKinsey projects as 20 to 60 percent productivity gains. The Core Technology and Data layer provides the foundation: unified data architectures that break down silos, vector databases that enable semantic search over unstructured data, and LLM pipelines that manage model life cycles. The Operating Model layer organizes people: cross-functional teams, AI "control towers" for governance, and accountability structures focused on outcomes rather than project completion.

The framework is coherent. The problem is execution.

Why banks freeze

Bank leaders face three structural barriers when they try to move from vision to implementation. BCG research found that only 25% of institutions have integrated AI capabilities into their strategic playbook; the other 75% remain stuck in isolated pilots and proofs of concept.

The first barrier is procurement. The phrase "AI infrastructure" often triggers 18-month RFP cycles involving procurement, legal, and compliance. In a technology landscape that evolves this quickly, solutions selected at the start of such a process can be obsolete by the time they are deployed. Many institutions try to compensate by building custom pipelines in-house, assembling complex streaming, rules, and analytics stacks that require large engineering teams just to keep running. For most banks, that approach is economically unsustainable.

The second is governance. Model risk management frameworks were designed for static, deterministic models. Generative and agentic systems introduce probabilistic behavior that must be governed continuously, through explainability, lineage, and live performance monitoring, rather than through one-time approvals.

The third is control. Banks are rightly skeptical of "transformation" pitches that require outsourcing decision logic to third parties. Their risk policies and customer insights are intellectual property. Handing control of that logic to vendors inverts the value proposition.

These barriers explain the gap between enthusiasm and action. The way through is to reframe the task: not as a wholesale transformation but as a series of contained deployments that prove value and build institutional capability.

The scale of the transformation ahead

The stakes are becoming clearer. A Citigroup report found that 54% of banking jobs have high potential for automation, with another 12% that could be augmented by AI. According to Bloomberg Intelligence, global banks will cut as many as 200,000 jobs over the next three to five years as AI encroaches on tasks currently performed by human workers. Singapore's DBS Bank has already announced plans to reduce its workforce by 4,000 positions over three years as AI takes over roles, while deploying more than 800 AI models across 350 use cases.

Yet Accenture research suggests the opportunity outweighs the disruption: productivity could rise 20 to 30 percent and revenue 6 percent for banks that implement effectively. The question is not whether to transform, but how to do it without undermining operational stability.

Part II: Five Tractable Slices

McKinsey's framework defines what an AI bank looks like. What it doesn't prescribe is how regulated institutions can move toward that future incrementally, without exposing customers, regulators, or balance sheets to unacceptable risk. The following "tractable slices" reflect execution patterns observed in live deployments where banks proved value first and then scaled safely.

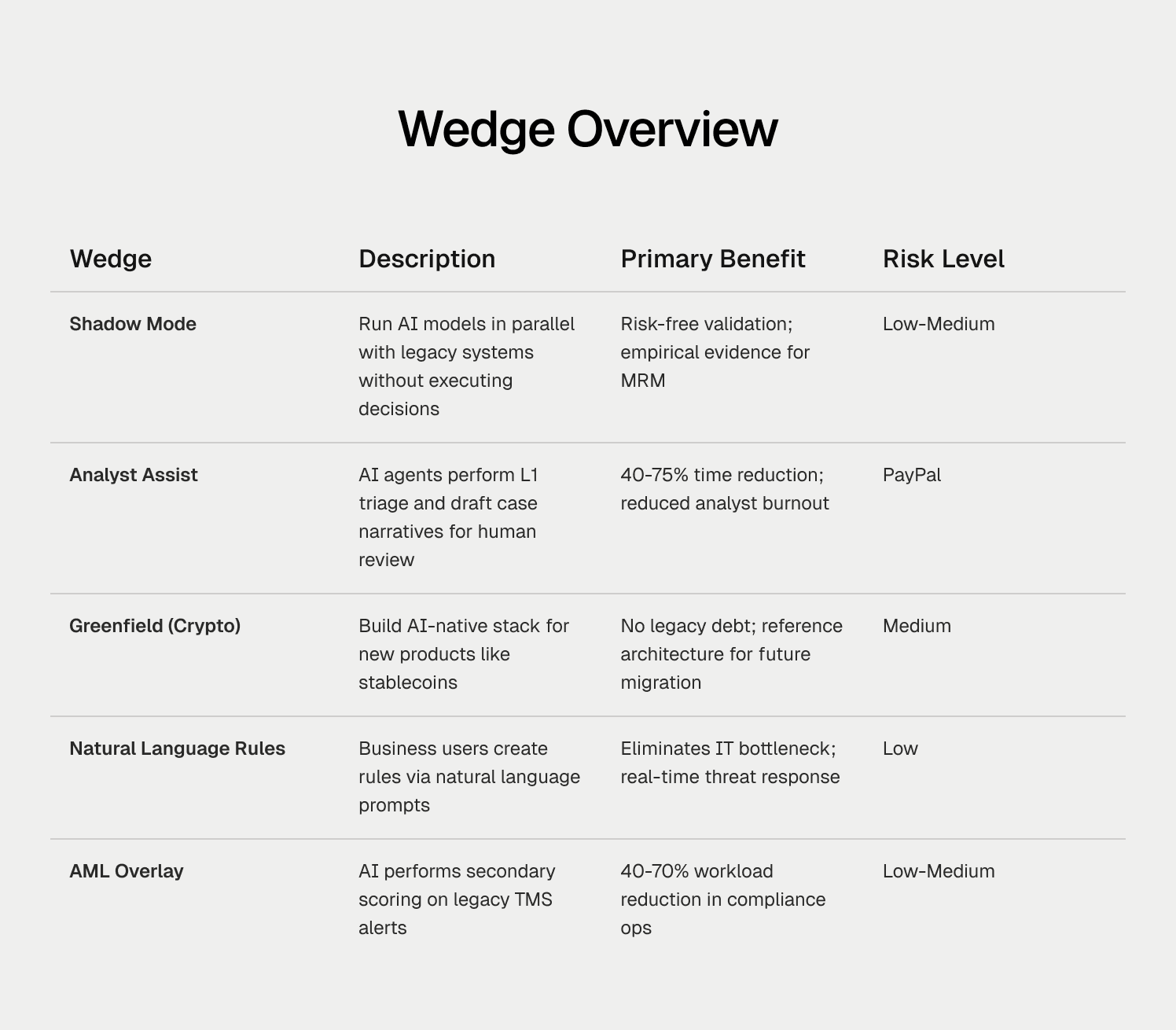

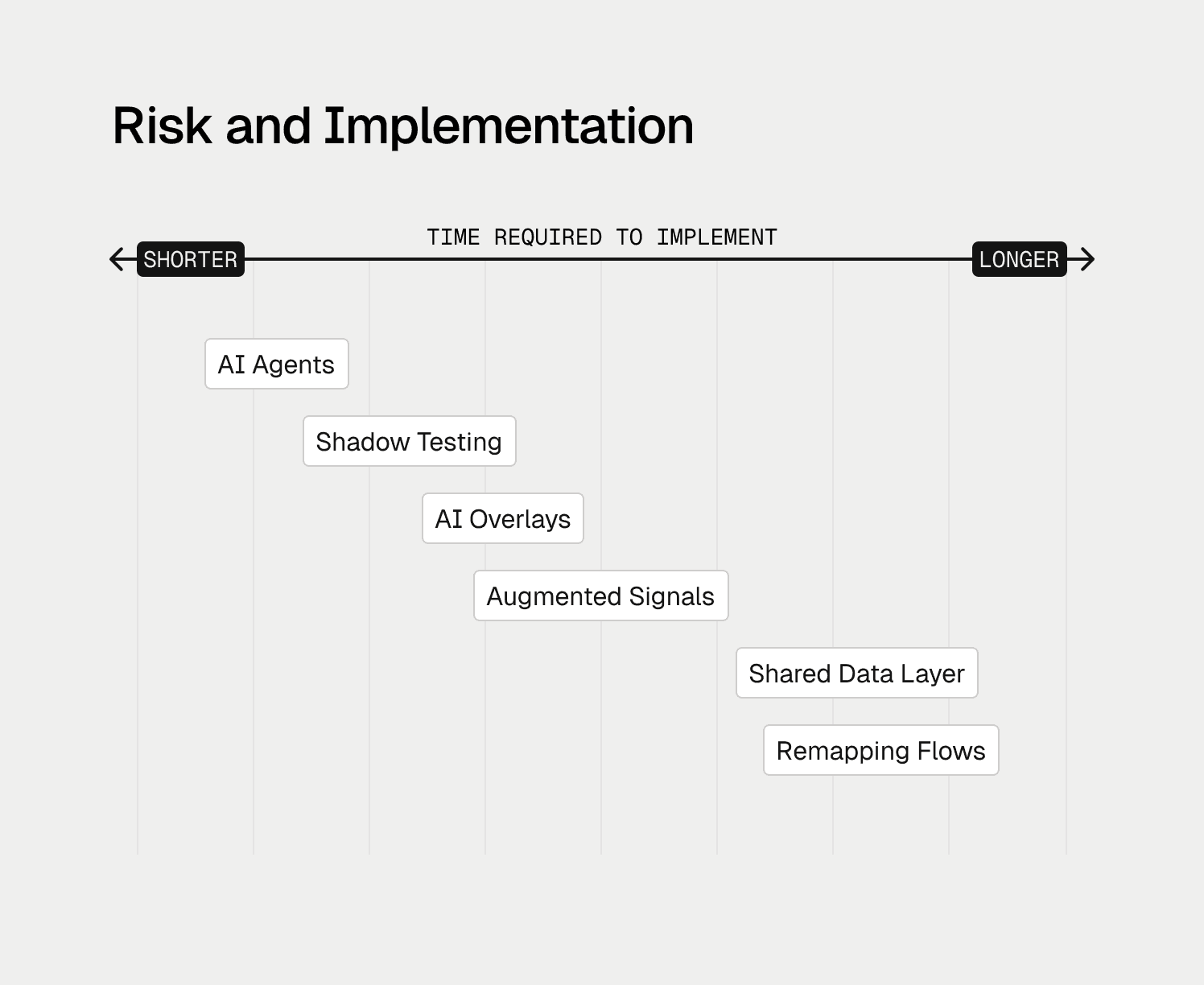

A slice is a targeted implementation that introduces modern AI infrastructure alongside legacy systems without requiring wholesale replacement. The five slices below offer varying risk profiles and immediate applicability for risk and compliance leaders.

Slice 1: Shadow-mode decisioning

The problem: Banks hesitate to deploy new AI models directly into production because the cost of error is high. A false positive blocks a legitimate customer. A false negative lets fraud through. Either outcome triggers regulatory scrutiny and customer attrition.

The solution: Shadow mode runs a new AI decision engine in parallel with the legacy system. Both systems receive the same live production data, and both make decisions. Only the legacy system's decisions are executed; the AI system's decisions are logged for comparison.

How it works: Transaction and customer data streams are duplicated, often via API gateways or event-streaming platforms such as Kafka. One stream feeds the legacy rules engine; the other feeds the AI platform. Risk analysts compare both sets of decisions against actual outcomes. When the AI system catches a fraud ring the legacy system missed, that is evidence of "alpha." When the AI system would have blocked a legitimate customer, that is a tuning opportunity.
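The comparison logic can be sketched in a few lines of Python. This is a minimal illustration, assuming in-process function calls instead of a duplicated Kafka stream; `legacy_rule`, `ai_model`, and `ShadowRunner` are hypothetical names, and the transactions and outcomes are made up. Only the legacy decision is returned to the caller; the AI decision is logged and later scored against confirmed outcomes.

```python
from dataclasses import dataclass, field


def legacy_rule(txn: dict) -> bool:
    """Hypothetical existing rule: block only large transfers."""
    return txn["amount"] > 10_000


def ai_model(txn: dict) -> bool:
    """Hypothetical new engine: also flags new accounts on shared devices."""
    return txn["amount"] > 10_000 or (txn["account_age_days"] < 30 and txn["shared_device"])


@dataclass
class ShadowRunner:
    records: list = field(default_factory=list)

    def decide(self, txn: dict) -> bool:
        live = legacy_rule(txn)  # executed decision
        shadow = ai_model(txn)   # logged only, never acted on
        self.records.append({"id": txn["id"], "legacy": live, "ai": shadow})
        return live

    def compare(self, outcomes: dict) -> dict:
        """Join logged decisions with confirmed outcomes (True = confirmed fraud)."""
        stats = {"agree": 0, "ai_only_fraud": 0, "ai_only_legit": 0, "legacy_only": 0}
        for r in self.records:
            if r["ai"] == r["legacy"]:
                stats["agree"] += 1
            elif r["ai"]:
                # AI would have blocked, legacy did not: "alpha" if fraud, tuning case if legit
                key = "ai_only_fraud" if outcomes[r["id"]] else "ai_only_legit"
                stats[key] += 1
            else:
                stats["legacy_only"] += 1
        return stats


runner = ShadowRunner()
for txn in [
    {"id": 1, "amount": 12_000, "account_age_days": 400, "shared_device": False},
    {"id": 2, "amount": 900, "account_age_days": 3, "shared_device": True},
    {"id": 3, "amount": 450, "account_age_days": 10, "shared_device": True},
    {"id": 4, "amount": 200, "account_age_days": 900, "shared_device": False},
]:
    runner.decide(txn)

print(runner.compare({1: True, 2: True, 3: False, 4: False}))
# → {'agree': 2, 'ai_only_fraud': 1, 'ai_only_legit': 1, 'legacy_only': 0}
```

Transaction 2 is the "alpha" case (fraud the legacy rule missed); transaction 3 is the tuning case (a legitimate customer the AI would have blocked).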

Why it works: Shadow mode is essentially back-testing and forward-testing on live data with zero production risk. It creates the empirical record that model risk management committees require. Once the AI system consistently outperforms the legacy one, the bank can flip the switch or ramp traffic gradually through a canary deployment. More importantly, it enables continuous validation: risk teams can measure false positives, false negatives, and drift over time, which aligns far more closely with how regulators increasingly expect AI systems to be governed.
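One common way to implement a gradual canary ramp is deterministic, hash-based bucketing. The Python sketch below is an assumption about how such routing could work, not a description of any specific platform; the customer IDs and ramp percentages are hypothetical.

```python
import hashlib


def routes_to_ai(customer_id: str, canary_percent: int) -> bool:
    """Send a stable slice of customers to the new engine during a canary ramp.

    Hashing the ID, instead of sampling at random per request, keeps each
    customer on the same engine across requests, so outcomes stay attributable.
    """
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return bucket < canary_percent


# Illustrative ramp schedule; each step would be gated on shadow-mode evidence.
customers = [f"cust-{i}" for i in range(10_000)]
for pct in (1, 5, 25, 100):
    share = sum(routes_to_ai(c, pct) for c in customers) / len(customers)
    print(f"{pct:>3}% target -> {share:.1%} of customers on the AI engine")
```

Because each customer's bucket is fixed, everyone in the 5% slice stays on the AI engine when the ramp widens to 25%, which keeps before-and-after comparisons clean.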

Risk level: Low. No customer impact until the bank decides to act on the evidence.

Slice 2: Analyst-assist agents (Level 1 triage)

The problem: Risk and compliance teams spend most of their time on Level 1 triage, mechanical work such as gathering data, copying between screens, and dismissing obvious false positives, instead of exercising judgment. Legacy transaction-monitoring systems generate false-positive rates of 90 to 95 percent or higher. Global AML compliance costs now exceed $274 billion a year, much of it spent handling low-quality alerts rather than catching criminals.

The solution: Deploy AI agents to handle data gathering and preliminary analysis. The agent doesn't replace the analyst; it assembles the case file.

How it works: When an alert fires, an "Investigation Agent" automatically queries internal databases, external watchlists, and relevant open sources. It synthesizes the findings into a natural-language case narrative that explains why the alert fired and recommends a disposition. The analyst reviews the assembled work product instead of starting from a blank screen.
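A deterministic Python sketch of the case-assembly step, assuming hypothetical in-memory data sources in place of real databases and watchlists. A production agent would typically use an LLM to write the narrative; here a string template stands in for it so the flow is visible: gather, summarize, recommend, and leave the final decision to the analyst.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical in-memory stand-ins for internal databases and an external watchlist.
CUSTOMERS = {"C-17": {"name": "Acme Imports", "kyc_risk": "high"}}
WATCHLIST = {"Acme Imports"}
RECENT_TXNS = {"C-17": [9_800, 9_900, 9_700]}  # cash deposit amounts


@dataclass
class CaseFile:
    alert_id: str
    findings: list
    recommendation: str
    narrative: str
    final_decision: Optional[str] = None  # set only by the human analyst


def investigate(alert_id: str, customer_id: str, threshold: int = 10_000) -> CaseFile:
    """Gather context from each source and draft a case file for analyst review."""
    customer = CUSTOMERS[customer_id]
    findings = []
    if customer["name"] in WATCHLIST:
        findings.append("name matches an external watchlist entry")
    near = [a for a in RECENT_TXNS.get(customer_id, []) if 0.9 * threshold <= a < threshold]
    if len(near) >= 3:
        findings.append(f"{len(near)} deposits just below {threshold:,}")
    if customer["kyc_risk"] == "high":
        findings.append("onboarding KYC risk rated high")
    recommendation = "escalate" if findings else "close"
    summary = "; ".join(findings) if findings else "no corroborating risk found"
    narrative = (f"Alert {alert_id} ({customer['name']}): {summary}. "
                 f"Recommended disposition: {recommendation}.")
    return CaseFile(alert_id, findings, recommendation, narrative)


case = investigate("A-1", "C-17")
print(case.narrative)
case.final_decision = "escalate"  # the analyst, not the agent, records the decision
```

The `final_decision` field stays empty until a human fills it in, mirroring the human-in-the-loop split described in the text.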

Why it works: Case studies suggest this approach cuts manual review time by 75% and lets junior analysts perform at senior levels. The human in the loop remains for the final judgment, which preserves governance while unlocking efficiency. The most effective deployments treat AI as an augmentation layer: agents gather context, classify alerts, and draft narratives, but humans retain decision authority, backed by complete audit trails and explainable reasons.

Risk level: Low to medium. Humans retain decision authority; AI handles data aggregation.

Slice 3: White-space products (stablecoins and crypto)

The problem: Banks entering stablecoins, tokenized deposits, or crypto custody face a timing mismatch. These assets run on blockchain rails that operate 24/7 with instant settlement. The GENIUS Act, signed into law in July 2025, established the first federal regulatory framework for payment stablecoins and requires federal banking agencies to adopt comprehensive rules by July 2026. Traditional risk systems designed for T+2 settlement cannot manage risk at blockchain speed.

The solution: Build an AI-native risk stack specifically for new product lines. Because these are white-space deployments, there is no legacy system to replace.

How it works: Deploy a platform capable of sub-100 ms decisioning to match blockchain speed. Ingest both traditional fiat data (KYC, wire transfers) and on-chain data (wallet addresses, transaction graphs) to detect money-laundering networks that span both worlds. Use AI agents to enforce rules automatically, such as blocking transfers to sanctioned wallet addresses in real time.
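A minimal Python sketch of the real-time sanctions check, assuming a hypothetical in-memory set of sanctioned addresses and a simple fiat-side KYC flag. It only shows the shape of the decision and a latency check against the 100 ms budget; graph analysis of on-chain transaction flows, which the text also calls for, is out of scope here.

```python
import time

# Hypothetical sanctioned-address list, stored lowercase; a real deployment would
# refresh this from a sanctions data feed.
SANCTIONED_WALLETS = {"0x000000000000000000000000000000000000dead"}
LATENCY_BUDGET_MS = 100.0  # the sub-100 ms target from the text


def decide_transfer(transfer: dict) -> dict:
    """Screen one outbound transfer against on-chain and fiat-side rules."""
    start = time.perf_counter()
    destination = transfer["to_address"].lower()  # normalize hex-address case
    if destination in SANCTIONED_WALLETS:
        decision, reason = "block", "destination wallet is sanctioned"
    elif transfer["kyc_status"] != "verified":
        decision, reason = "hold", "fiat-side KYC not verified"
    else:
        decision, reason = "allow", "no rule triggered"
    elapsed_ms = (time.perf_counter() - start) * 1000
    return {
        "decision": decision,
        "reason": reason,
        "elapsed_ms": elapsed_ms,
        "within_budget": elapsed_ms < LATENCY_BUDGET_MS,
    }


result = decide_transfer({"to_address": "0x000000000000000000000000000000000000DEAD",
                          "kyc_status": "verified"})
print(result["decision"], result["reason"])  # → block destination wallet is sanctioned
```

A set lookup keeps the sanctions check constant-time on average, which leaves most of the latency budget for richer model scoring.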

Why it works: New products offer "security by design." Success creates a reference architecture and in-house expertise that build the case for migrating legacy business lines to modern infrastructure.

Risk level: Medium. New-product risk, but no legacy-migration risk.

Slice 4: Natural-language rule generation

The problem: In traditional banks, changing a risk rule requires a risk officer to document the logic, hand it to IT, wait for a development sprint, and then wait for testing. The translation layer between business intent and code takes days or weeks. Fraudsters change tactics in minutes.

The solution: Modern AI platforms let nontechnical users create and test rules in natural language. A risk officer types: "Flag all transactions above $5,000 from IP addresses in high-risk jurisdictions if the account is less than 30 days old." The AI translates that into executable logic.

How it works: The risk officer enters the policy intent through a chat interface. A generative AI agent translates the prompt into decision code. The agent immediately runs a simulation (using shadow mode) to show the impact on historical data: "This rule would have caught 50 fraud cases but triggered 200 false positives last week." Once validated, the rule is deployed to production, subject to governance approval.
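The Python sketch below shows what the output of the translation step might look like for the example policy and how the pre-deployment simulation can be computed. The natural-language-to-code translation itself is assumed to have already happened; `generated_rule`, the placeholder jurisdiction codes `XA` and `XB`, and the four historical transactions are hypothetical.

```python
# Hypothetical translation of: "Flag all transactions above $5,000 from IP addresses
# in high-risk jurisdictions if the account is less than 30 days old."
HIGH_RISK_JURISDICTIONS = {"XA", "XB"}  # placeholder codes, not a real risk list


def generated_rule(txn: dict) -> bool:
    return (
        txn["amount"] > 5_000
        and txn["ip_country"] in HIGH_RISK_JURISDICTIONS
        and txn["account_age_days"] < 30
    )


def simulate(rule, history: list) -> dict:
    """Replay a candidate rule over labeled history before it touches production."""
    flagged = [t for t in history if rule(t)]
    caught = sum(1 for t in flagged if t["is_fraud"])
    total_fraud = sum(1 for t in history if t["is_fraud"])
    return {
        "flagged": len(flagged),
        "fraud_caught": caught,
        "false_positives": len(flagged) - caught,
        "fraud_missed": total_fraud - caught,
    }


history = [
    {"amount": 7_500, "ip_country": "XA", "account_age_days": 5, "is_fraud": True},
    {"amount": 6_200, "ip_country": "XB", "account_age_days": 12, "is_fraud": False},
    {"amount": 9_000, "ip_country": "US", "account_age_days": 2, "is_fraud": True},
    {"amount": 5_500, "ip_country": "XA", "account_age_days": 400, "is_fraud": False},
]
print(simulate(generated_rule, history))
# → {'flagged': 2, 'fraud_caught': 1, 'false_positives': 1, 'fraud_missed': 1}
```

A summary like this is what a governance reviewer would look at before approving the rule for production.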

Why it works: Removing the IT bottleneck lets risk teams respond to threats in real time. Business teams gain direct control over their tools while staying inside governance frameworks.

Risk level: Low with proper governance. Simulation prevents poorly tuned rules from being deployed.

Slice 5: AML false-positive reduction

The problem: Anti-money laundering (AML) is often the most expensive and least efficient compliance function. Rule-based transaction-monitoring systems ("flag any cash deposit above $10k") generate massive alert queues with false-positive rates of 95 to 98 percent.

The solution: Deploy an AI "overlay" that performs secondary scoring on alerts from the legacy system. The core transaction monitoring system (TMS) remains intact.

How it works: The legacy TMS generates alerts based on regulatory rules. Those alerts pass to an AI agent that analyzes thousands of additional features: behavioral patterns, network links, device IDs. The AI identifies alerts that are clearly false positives and closes them automatically, with documented rationale for audit. Only high-risk alerts reach human investigators. The impact comes less from novel algorithms than from context: when onboarding, behavioral, transaction, and network signals are evaluated together, previously invisible patterns become obvious.
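The overlay pattern can be sketched in Python as follows, assuming hypothetical feature names, weights, and an auto-close threshold; a simple weighted sum stands in for the model. The legacy alert passes through unchanged; the overlay only adds a score and either closes the alert with a recorded rationale or routes it to an investigator.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    alert_id: str
    rule: str       # the legacy TMS rule that fired; the TMS itself is untouched
    features: dict  # extra context the overlay can see (behavior, network, device)


# Hypothetical weights; a real overlay would use a validated, monitored model.
WEIGHTS = {"network_link_to_flagged": 0.5, "behavior_change": 0.3, "new_device": 0.2}
AUTO_CLOSE_BELOW = 0.2


def overlay(alerts: list):
    """Score legacy alerts; auto-close low scores with a rationale, escalate the rest."""
    closed, escalated = [], []
    for a in alerts:
        present = [name for name in WEIGHTS if a.features.get(name)]
        score = sum(WEIGHTS[name] for name in present)
        if score < AUTO_CLOSE_BELOW:
            closed.append({
                "alert_id": a.alert_id,
                "score": score,
                # audit trail: which rule fired and which corroborating signals were found
                "rationale": f"'{a.rule}' fired; corroborating signals found: {present or 'none'}",
            })
        else:
            escalated.append({"alert_id": a.alert_id, "score": score, "signals": present})
    return closed, escalated


closed, escalated = overlay([
    Alert("A1", "cash deposit > $10k", {}),
    Alert("A2", "cash deposit > $10k", {"network_link_to_flagged": True, "new_device": True}),
])
print([c["alert_id"] for c in closed], [e["alert_id"] for e in escalated])  # → ['A1'] ['A2']
```

Storing the rationale next to each auto-closed alert is what makes the suppression reviewable by auditors later.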

Why it works: Pure augmentation, with no core replacement. Industry benchmarks suggest a 40 to 70 percent reduction in manual workload. The ROI math is simple enough to survive CFO scrutiny.
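A back-of-the-envelope version of that ROI math, in Python. The alert volume and minutes per alert are hypothetical inputs chosen only for illustration; the 40 to 70 percent range is the one cited above.

```python
def analyst_hours_saved(alerts_per_month: int, minutes_per_alert: float,
                        workload_reduction: float) -> float:
    """Monthly analyst hours freed when a share of manual alert review goes away."""
    return alerts_per_month * minutes_per_alert * workload_reduction / 60


# Hypothetical inputs: 20,000 alerts per month, 15 minutes of review each.
for reduction in (0.40, 0.70):
    hours = analyst_hours_saved(20_000, 15, reduction)
    print(f"{reduction:.0%} reduction: {hours:,.0f} analyst hours/month")
# → 40% reduction: 2,000 analyst hours/month
# → 70% reduction: 3,500 analyst hours/month
```

Multiplying the freed hours by a bank's own loaded analyst cost turns this into the dollar figure a CFO would review.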

Risk level: Low to medium. Requires audit-grade documentation of the suppression rationale.

Part III: Evidence from the field

Over the past decade, financial institutions invested heavily in real-time data infrastructure: event streaming, APIs, and faster pipelines. Yet they often ran into the same constraint: although data increasingly arrived in real time, risk decisions often did not.

Teams accumulated fragmented systems, each optimized in isolation. Some tried to build custom decisioning layers on top of modern data stacks, only to discover that maintaining accuracy, explainability, and compliance at scale required far more engineering effort than expected.

This was not unique to banks. Fintechs faced similar fragmentation and false-positive rates. The difference lay in operating constraints. Fintechs were often forced to iterate quickly because inefficiency showed up immediately as losses or customer friction. Banks moved under stricter requirements for stability, auditability, and regulatory trust.

What emerges is a clearer picture of where AI delivers reliable value today: back-end decisioning, meaning fraud detection, AML triage, and document processing. These areas produce measurable results because their outputs can be verified, reviewed, and continuously improved. By contrast, AI systems that interact directly with customers or make irreversible financial decisions demand greater caution and stronger controls.

The examples below reflect what we have observed through deployments of Oscilar's AI risk decisioning platform. They illustrate how institutions have applied these lessons in practice, adopting unified decisioning and human oversight incrementally to capture the benefits of agility without taking on additional operational or regulatory risk.

MoneyGram, SoFi, and Nuvei: Consolidating decisioning under governance

MoneyGram, SoFi, and Nuvei illustrate a common constraint at scale: even with modern data infrastructure, risk decisioning often lags the speed at which money moves.

MoneyGram operates a global payments network spanning thousands of corridors and jurisdictions. As it expanded into instant settlement and digital assets, batch-oriented risk systems became increasingly hard to adapt.

Rather than replacing downstream systems, MoneyGram consolidated fraud, AML, and onboarding decisions into a single decision layer. New rules and models were evaluated in shadow mode alongside existing controls, letting teams measure impact on live data before any production change. This enabled real-time decisioning suited to stablecoin initiatives while preserving operational discipline. The teams reported up to a 70% reduction in data-migration time and the ability to evolve risk logic continuously without disrupting production processes.

SoFi encountered a similar dynamic from a different starting point. Across lending, fraud, and collections, policy changes were often blocked by engineering queues and fragmented tooling. By centralizing decision logic and enabling governed experimentation, SoFi cut time to market for new risk strategies by about 50% and improved processing speed by more than 30%, while maintaining consistent oversight across products.

In payments environments such as Nuvei's, the same architecture supports inline risk decisions under tight latency constraints, where the cost of error is immediate (fraud losses or customer friction), making shadow-mode testing and clear escalation paths essential. As Daniel Hough, Director of Risk and Underwriting at Nuvei, put it: "Our previous solution simply didn't offer the forward-looking functionality we needed: AI, automation, or tools to make complex recommendations beyond basic rule sets. Oscilar gives us the flexibility and intelligence to manage our portfolio in completely new ways, and that's a big deal for us."

The lesson: unify decisioning, validate accuracy with humans in the loop, and then expand scope.

Flexcar, Dibsy, and Fluz: Reducing false positives through shared context

In deployments with Flexcar, Dibsy, and Fluz, similar problems surfaced early: high false positives, manual-review backlogs, and disconnected risk signals. Improvements came from evaluating signals together and tightening feedback loops while keeping humans accountable.

Flexcar halved its risk rates and cut asset losses to zero by coordinating identity, behavioral, and transaction checks within a single decision flow, with human reviewers overseeing edge cases.

Dibsy achieved a ~80% reduction in fraud while speeding up merchant onboarding fivefold, by assessing onboarding and transaction risk holistically rather than through separate tools.

Fluz reduced manual reviews by about 90% and increased approval rates by about 20% by shifting alert triage and context gathering to automated workflows, while keeping final decisions with human reviewers.

These results reflect where AI works best: back-end decisioning and analyst support, where results can be measured, audited, and continuously improved.

Clara, Cashco, and Parker: Empowering risk teams without engineering dependency

In underwriting and compliance operations, delays are often driven less by model quality than by long engineering queues. Changes to decision logic can take weeks, limiting responsiveness to market conditions.

Deployments with Clara, Cashco, and Parker show how this constraint can be addressed without sacrificing control. By letting risk teams configure, test, and iterate on decision logic directly (within governed limits and with full audit trails), these organizations significantly shortened their iteration cycles.

Clara reported 3x faster onboarding, 3 to 4x higher throughput without additional headcount, and consistent SLA performance under growth. Cashco and Parker saw similar effects: underwriting deployments in days instead of weeks, backlog reductions of ~70%, and ~40% faster processing times.

For banks, this pattern reduces dependence on scarce engineering resources while preserving explainability and control.

TransPecos Banks: Incremental modernization under regulatory oversight

TransPecos Banks, a century-old community bank supporting several Banking-as-a-Service partners, faced growing AML complexity without the capacity to increase headcount or regulatory exposure.

Rather than replacing core systems, TransPecos centralized AML decisioning and case management while keeping existing controls in place. Alert triage, investigations, and SAR preparation were unified, with humans retaining final authority over all filing decisions.

The operational impact included:

40% reduction in AML operating costs

70% reduction in alert review time

80% reduction in SAR management time

Projected annual savings of more than $3M

Equally important was the impact during regulatory examinations. Teams demonstrated complete audit trails and lineage, from alerts to filings, within a single system, eliminating retroactive reconciliation across multiple tools.

One examiner remarked: "This is the clearest risk management control we've seen from a bank your size." When AI performs mechanical work with complete documentation and humans retain judgment over critical decisions, compliance becomes more defensible, not more complex.

What these deployments show

Across global networks, fintechs, and community banks, consistent patterns emerge:

AI delivers immediate value in fraud detection, AML triage, and document processing, where outputs can be verified and reviewed

The biggest returns appear in the decision layer, not in customer-facing automation

Safe progress comes from shadow mode, measurable outcomes, and human-in-the-loop controls

Unified decisioning reduces false positives and operational load without increasing risk

The dividing line is not between banks and fintechs, or between speed and safety. It is between organizations that treat AI-driven decisioning as a governed learning system embedded in their decision infrastructure and those that deploy it as a collection of disconnected tools.

Banks can adopt the same architectural principles (unified decisioning, fast feedback loops, and clear human accountability) while implementing them incrementally under full regulatory oversight.

In regulated risk operations, disciplined iteration with explainability and auditability consistently outperforms experimentation without guardrails.

Part IV: Governance as an enabler

In regulated domains, governance is not a constraint on AI adoption; it is the mechanism that makes adoption possible. AI systems that influence credit, fraud, or compliance outcomes must be explainable, auditable, and continuously monitored. Institutions that treat governance as living infrastructure rather than static documentation consistently move faster than their competitors.

Echoing McKinsey's emphasis on platform operating models and centralized governance, banks should establish an AI Control Tower. For AI agents to operate in production, especially in regulated domains such as credit and AML, they must be explainable: every decision requires a clear, human-readable rationale. The EU AI Act, which entered into force in August 2024 with full application expected in August 2026, classifies AI systems used for credit scoring as "high-risk" and introduces additional safeguards. The European Banking Authority found no significant contradictions between the AI Act and existing banking legislation, suggesting that existing frameworks can accommodate AI with integration effort.

The Control Tower acts as air traffic control for the bank's AI: monitoring performance, bias, and drift in real time; enforcing risk appetite and regulatory requirements; and ensuring that successful innovations scale across the enterprise.

Effective oversight requires more than approval committees. It requires real-time monitoring of accuracy, drift, and human override rates, so that problems are detected immediately rather than months later during audits.
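As one illustration of those live metrics, the Python sketch below computes score drift with the Population Stability Index (PSI), a common drift measure used here as an example rather than one named in the text, plus the human override rate. The bin shares, the sample decisions, and the alert limits are assumptions: 0.25 for PSI is a widely used rule of thumb, and 10 percent for overrides is purely illustrative.

```python
import math


def psi(expected: list, actual: list, eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions (share per bin)."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # guard against log(0) on empty bins
        total += (a - e) * math.log(a / e)
    return total


def override_rate(decisions: list) -> float:
    """Share of human-reviewed model decisions that the reviewer reversed."""
    reviewed = [d for d in decisions if d["human"] is not None]
    if not reviewed:
        return 0.0
    return sum(d["human"] != d["model"] for d in reviewed) / len(reviewed)


def control_tower_check(baseline, current, decisions,
                        psi_limit=0.25, override_limit=0.10) -> list:
    """Return human-readable alerts when drift or overrides exceed their limits."""
    alerts = []
    drift = psi(baseline, current)
    if drift > psi_limit:
        alerts.append(f"score drift: PSI={drift:.2f}")
    rate = override_rate(decisions)
    if rate > override_limit:
        alerts.append(f"human override rate {rate:.0%}")
    return alerts


baseline = [0.25, 0.25, 0.25, 0.25]  # score-bin shares at validation time
current = [0.05, 0.15, 0.30, 0.50]   # score-bin shares this week
decisions = ([{"model": "block", "human": "block"}] * 8
             + [{"model": "block", "human": "allow"}] * 2
             + [{"model": "allow", "human": None}])
print(control_tower_check(baseline, current, decisions))
# → ['score drift: PSI=0.56', 'human override rate 20%']
```

Running a check like this on every scoring window, rather than at annual review, is what turns governance from documentation into monitoring.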

The execution phase has begun

The paralysis gripping many banking technology and risk leaders stems from a misconception: that modernization requires a dangerous rip-and-replace of the core. The evidence points to a different path.

McKinsey's AI Bank of the Future framework correctly identifies the architectural end state; the slices outlined here show how banks can reach it pragmatically, without disrupting operations or ceding control of decision logic. Banks can layer intelligent, agentic infrastructure on top of legacy cores today. Start with shadow mode to prove safety. Deploy analyst-assist agents to relieve immediate operational pressure. Use white-space opportunities such as stablecoins to build cloud-native risk stacks from scratch.

The playbook for risk leaders: Own the infrastructure instead of outsourcing decision logic to point solutions. Start with the decision layer, where McKinsey projects 20 to 60 percent productivity gains and where risk reductions show up immediately. Redesign entire domains end to end instead of piloting isolated tools. Demand agency from your AI: systems that plan, route, and execute, not just chatbots that retrieve information. Use shadow mode and natural-language tooling to accelerate innovation while maintaining regulatory oversight.

According to BCG research, AI agents already account for 17% of total AI value across industries in 2025, a share projected to reach 29% by 2028. Banks that execute on this will not just be faster. They will be fundamentally different institutions: able to reason in real time, adapt instantly to new threats, and serve customers with a level of personalization and security that batch-processing systems cannot match.

Over the next five years, the dividing line will not be between banks that "use AI" and those that don't. It will be between institutions that treat AI-driven decisioning as core infrastructure and those that keep bolting it on at the edges.

The blueprint exists. The slices are available. The question is no longer whether AI will transform banking, but which institutions will transform first. Banks that cannot reason and act in real time across the entire customer journey will not merely fall behind; they risk becoming operationally irrelevant.

Appendix: Slice selection guide